In this post we’ll walk through the GoldenGate replication flow and examine each of the architectural components.

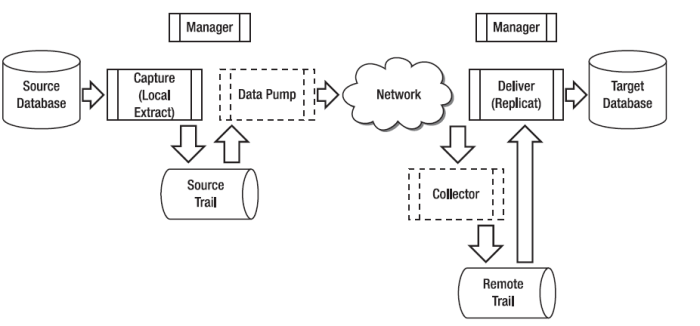

GoldenGate Replication Flow

The typical GoldenGate flow shows new and changed database data being captured from the source database. The captured data is written to a file called the source trail. The trail is then read by a data pump, sent across the network, and written to a remote trail file by the Collector process. The delivery function reads the remote trail and updates the target database. Each of the components is managed by the Manager process.

GoldenGate Components

The flow above involves the following GoldenGate components:

- Source Database

- Capture (Local Extract) Process

- Source Trail

- Data Pump

- Network

- Collector

- Remote Trail

- Delivery (Replicat)

- Target Database

- Manager

Now let’s look at each component individually:

Source Database

This is the Oracle database from which you want to replicate data.

Capture (Local Extract) Process

Capture is the process of extracting data that is inserted into, updated on, or deleted from the source database. In GoldenGate, the capture process is called the Extract. In this case, the Extract is called a Local Extract (sometimes called the Primary Extract) because it captures data changes from the local source database.

Extract is an operating-system process that runs on the source server, captures changes from the database redo logs (and in some exceptional cases, the archived redo logs), and writes the data to a file called the Trail File. Extract only captures committed changes and filters out other activity such as rolled-back changes. Extract can also be configured to write the Trail File directly to a remote target server, but this usually isn’t the optimum configuration. In addition to database data manipulation language (DML) data, you can also capture data definition language (DDL) changes and sequences using Extract if it is properly configured. You can use Extract to capture data to initially load the target tables, but this is typically done using DBMS utilities such as export/import or Oracle Data Pump (not to be confused with the GoldenGate data pump described later).

You can configure Extract as a single process or multiple parallel processes depending on your requirements. Each Extract process can act independently on different tables. For example, a single Extract can capture all the changes for all of the tables in a schema, or you can create multiple Extracts and divide the tables among them. In some cases, you may need to create multiple parallel Extract processes to improve performance, although this usually isn’t necessary. You can stop and start each Extract process independently.
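The "committed changes only" behaviour can be pictured with a small Python sketch. This is a toy model and not GoldenGate code: the record layout `(txn_id, action, payload)` and the function name `capture_committed` are assumptions made up for illustration. It buffers operations per open transaction, emits them when the commit arrives, and drops them on rollback.

```python
from collections import defaultdict


def capture_committed(log_records):
    """Yield (txn_id, operations) only for transactions that commit.

    log_records is an iterable of (txn_id, action, payload) tuples where
    action is "op", "commit", or "rollback". Hypothetical layout, not the
    real redo log format.
    """
    pending = defaultdict(list)  # operations buffered per open transaction
    for txn_id, action, payload in log_records:
        if action == "op":
            pending[txn_id].append(payload)
        elif action == "commit":
            # Emit the whole transaction once it is known to be committed.
            yield txn_id, pending.pop(txn_id, [])
        elif action == "rollback":
            # Rolled-back work never reaches the trail.
            pending.pop(txn_id, None)


redo = [
    (1, "op", "INSERT emp 100"),
    (2, "op", "UPDATE emp 200"),
    (1, "op", "UPDATE emp 100"),
    (2, "rollback", None),
    (1, "commit", None),
]
captured = list(capture_committed(redo))
print(captured)
```

Transaction 2 is rolled back, so only the two operations of transaction 1 are emitted.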

You can set up Extract to capture an entire schema using wildcarding, a single table, or a subset of rows or columns for a table. In addition, you can transform and filter captured data using the Extract to only extract data meeting certain criteria. You can instruct Extract to write any records that it’s unable to process to a discard file for later problem resolution. And you can generate reports automatically to show the Extract configuration. You can set these up to be updated periodically at user-defined intervals with the latest Extract processing statistics.
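To make the selection options concrete, here is a minimal Python sketch of wildcard table matching combined with row and column filtering. It only illustrates the idea; the function `select_records`, the record layout, and the table names are hypothetical and have nothing to do with actual GoldenGate parameter syntax.

```python
from fnmatch import fnmatchcase


def select_records(records, table_pattern, row_filter=None, columns=None):
    """Keep records whose table matches the wildcard pattern.

    Optionally keep only rows passing row_filter and only the named columns.
    """
    selected = []
    for rec in records:
        if not fnmatchcase(rec["table"], table_pattern):
            continue  # table not covered by the wildcard
        if row_filter is not None and not row_filter(rec["row"]):
            continue  # row does not meet the filter criteria
        row = rec["row"]
        if columns is not None:
            row = {c: row[c] for c in columns if c in row}
        selected.append({"table": rec["table"], "row": row})
    return selected


changes = [
    {"table": "HR.EMP", "row": {"id": 1, "dept": 10, "salary": 500}},
    {"table": "HR.DEPT", "row": {"id": 10, "name": "OPS"}},
    {"table": "FIN.LEDGER", "row": {"id": 7, "amount": 42}},
    {"table": "HR.EMP", "row": {"id": 2, "dept": 20, "salary": 900}},
]

# Entire schema via a wildcard.
whole_schema = select_records(changes, "HR.*")

# Single table, subset of rows and columns.
dept10 = select_records(
    changes,
    "HR.EMP",
    row_filter=lambda r: r["dept"] == 10,
    columns=["id", "dept"],
)
```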

Source Trail

The Extract process sequentially writes committed transactions as they occur to a staging file that GoldenGate calls a source trail. Data is written in large blocks for high performance. Data that is written to the trail is queued for distribution to the target server or another destination to be processed by another GoldenGate process, such as a data pump. Data in the trail files can also be encrypted by the Extract and then decrypted by the data pump or delivery process. You can size the trail files based on the expected data volume. When the specified size is reached, a new trail file is created. To free up disk space, you can configure GoldenGate to automatically purge trail files based on age or the total number of trail files. By default, data in the trail files is stored in a platform-independent, GoldenGate proprietary format. In addition to the database data, each trail file contains a file header, and each record also contains its own header. Each of the GoldenGate processes keeps track of its position in the trail files using checkpoints, which are stored in separate files.
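The rollover and purge behaviour can be sketched in a few lines of Python. This is an in-memory toy model, not how GoldenGate is implemented: the class `TrailWriter` and its parameters are hypothetical, and the rule that a file is never purged until the reader's checkpoint has moved past it is an assumption of this sketch.

```python
class TrailWriter:
    """Toy model of size-based rollover and count-based purging."""

    def __init__(self, max_file_bytes, keep_files):
        self.max_file_bytes = max_file_bytes
        self.keep_files = keep_files
        self.files = {0: []}  # sequence number -> list of records
        self.current_seq = 0
        self.current_size = 0

    def write(self, record):
        # Start a new file when this record would push the current one
        # past the configured size.
        if self.current_size and self.current_size + len(record) > self.max_file_bytes:
            self.current_seq += 1
            self.files[self.current_seq] = []
            self.current_size = 0
        self.files[self.current_seq].append(record)
        self.current_size += len(record)

    def purge(self, reader_checkpoint_seq):
        """Drop the oldest files beyond keep_files.

        Assumption: files at or after the reader's checkpoint are kept.
        """
        for seq in sorted(self.files):
            if len(self.files) <= self.keep_files:
                break
            if seq >= reader_checkpoint_seq:
                break
            del self.files[seq]


trail = TrailWriter(max_file_bytes=10, keep_files=2)
for _ in range(5):
    trail.write(b"123456")  # 6 bytes each, so every record starts a new file
trail.purge(reader_checkpoint_seq=4)
remaining = sorted(trail.files)
```

After five writes the model holds files 0 through 4; the purge keeps the two newest because the reader has already reached file 4.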

Data Pump

The data pump is another type of GoldenGate Extract process. The data pump reads the records in the source trail written by the Local Extract, pumps or passes them over the TCP/IP network to the target, and creates a target or remote trail. Although the data pump can be configured for data filtering and transformation (just like the Local Extract), in many cases the data pump reads the records in the source trail and simply passes all of them on as is. In GoldenGate terminology, this is called passthru mode. If data filtering or transformation is required, it’s a good idea to do this with the data pump to reduce the amount of data sent across the network.

Network

GoldenGate sends data from the source trail using the Local or data pump Extract over a TCP/IP network to a remote host and writes it to the remote trail file. The Local or data pump Extract communicates with another operating-system background process on the target called the Collector. The Collector is started dynamically for each Extract process on the source that requires a network connection to the target. The Collector listens for connections on a port configured for GoldenGate. Although it can be configured, the Collector process is often ignored because it’s started dynamically and does its job without requiring changes or intervention on the target. During transmission from the source to the target, you can compress the data to reduce bandwidth.

In addition, you can tune the TCP/IP socket buffer sizes and connection timeout parameters for the best performance across the network. If needed, you can also encrypt the GoldenGate data sent across the network from the source and automatically decrypt it on the target.
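To see why compressing before transmission helps, here is a rough Python sketch using the standard `zlib` module as a stand-in. It says nothing about which algorithm or wire format GoldenGate actually uses; `send_batch`, `receive_batch`, and the sample records are hypothetical.

```python
import zlib


def send_batch(records):
    """Join records and compress them before they go on the wire."""
    payload = b"\n".join(records)
    return zlib.compress(payload)


def receive_batch(wire_bytes):
    """Decompress on the target side and split back into records."""
    return zlib.decompress(wire_bytes).split(b"\n")


# Change records tend to be repetitive, which compresses well.
records = [b"UPDATE HR.EMP SET SALARY=900 WHERE ID=%d" % i for i in range(200)]
raw_size = len(b"\n".join(records))
wire = send_batch(records)
restored = receive_batch(wire)
print(raw_size, len(wire))
```

The target ends up with exactly the records that were sent, while fewer bytes cross the network.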

Collector

The Collector process is started automatically by the Manager as needed by the Extract. The Collector process runs in the background on the target system and writes records to the remote trail. The records are sent across the TCP/IP network connection from the Extract process on the source system (either by a data pump or a Local Extract process).

Remote Trail

The remote trail is similar to the source trail, except that it is created on the remote server, which could be the target database server or some other middle-tier server. The source trails and the remote trails are stored in a filesystem directory named dirdat by default. They are named with a two-character prefix followed by a six-digit sequence number. The same sizing approach used for the source trail applies to the remote trail: size the trail files based on the expected data volume, and when the specified size is reached, a new trail file is created. You can also configure GoldenGate to automatically purge the remote trail files based on age or the total number of trail files to free up disk space. Just like the source trail, data in the remote trail files is stored in a platform-independent, GoldenGate-proprietary format. Each remote trail file contains a file header, and each record also contains its own header. The GoldenGate processes keep track of their positions in the remote trail files using checkpoints, which are stored in separate GoldenGate files or optionally in a database table.
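The naming convention described above (two-character prefix plus six-digit sequence number, under dirdat) is easy to express in Python. The helper names and the prefixes `lt` and `rt` below are hypothetical examples, not names GoldenGate requires.

```python
import re

_TRAIL_NAME = re.compile(r"^(?P<prefix>.{2})(?P<seq>\d{6})$")


def trail_file_name(prefix, seq, directory="dirdat"):
    """Build a trail file path: directory/<2-char prefix><6-digit sequence>."""
    if len(prefix) != 2:
        raise ValueError("trail prefix must be exactly two characters")
    if not 0 <= seq <= 999999:
        raise ValueError("sequence number must fit in six digits")
    return f"{directory}/{prefix}{seq:06d}"


def parse_trail_file_name(name):
    """Split a bare trail file name back into (prefix, sequence)."""
    match = _TRAIL_NAME.match(name)
    if match is None:
        raise ValueError(f"not a trail file name: {name!r}")
    return match.group("prefix"), int(match.group("seq"))


first_local = trail_file_name("lt", 0)
later_remote = trail_file_name("rt", 42)
parsed = parse_trail_file_name("rt000042")
```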

Delivery (Replicat)

Delivery is the process of applying data changes to the target database. In GoldenGate, delivery is done by a process called the Replicat using the native database SQL. The Replicat applies data changes written to the trail file by the Extract process in the same order in which they were committed on the source database. This is done to maintain data integrity. In addition to replicating database DML data, you can also replicate DDL changes and sequences using the Replicat, if it’s properly configured. You can configure a special Replicat to apply data to initially load the target tables, but this is typically done using DBMS utilities such as Oracle Data Pump. Just like the Extract, you can configure Replicat as a single process or multiple parallel processes depending on the requirements. Each Replicat process can act independently on different tables. For example, a single Replicat can apply all the changes for all the tables in a schema, or you can create multiple Replicats and divide the tables among them. In some cases, you may need to create multiple Replicat processes to improve performance. You can stop and start each Replicat process independently.

Replicat can replicate data for an entire schema using wildcarding, a single table, or a subset of rows or columns for a table. You can configure the Replicat to map the data from the source to the target database, transform it, and filter it to only replicate data meeting certain criteria. You can write any records that Replicat is unable to process to a discard file for problem resolution. Reports can be automatically generated to show the Replicat configuration; these reports can be updated periodically at user-defined intervals with the latest processing statistics.
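Here is a small Python sketch of the two ideas in this section: applying changes in source commit order, and setting aside records that cannot be applied. It is a simplified model only. The target is a plain dict, `apply_transactions` and the `commit_seq` field are hypothetical, and continuing after a failed record is a simplification of this sketch rather than a statement about how Replicat handles errors.

```python
def apply_transactions(transactions, target, discards):
    """Apply transactions to the target dict in commit order.

    Operations that cannot be applied are appended to discards
    instead of being applied.
    """
    for txn in sorted(transactions, key=lambda t: t["commit_seq"]):
        for op, key, value in txn["ops"]:
            try:
                if op == "insert":
                    if key in target:
                        raise KeyError(f"duplicate key {key}")
                    target[key] = value
                elif op == "update":
                    if key not in target:
                        raise KeyError(f"missing key {key}")
                    target[key] = value
                elif op == "delete":
                    del target[key]  # raises KeyError if the row is absent
                else:
                    raise ValueError(f"unknown operation {op}")
            except (KeyError, ValueError) as exc:
                discards.append((txn["commit_seq"], op, key, str(exc)))


target_table = {}
discard_file = []
transactions = [
    {"commit_seq": 2, "ops": [("update", 1, "b")]},
    {"commit_seq": 1, "ops": [("insert", 1, "a")]},
    {"commit_seq": 3, "ops": [("delete", 9, None)]},
]
apply_transactions(transactions, target_table, discard_file)
```

Even though the update is listed first, it is applied after the insert because it committed later on the source. The delete of a row that does not exist ends up in the discard list.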

Target Database

This is basically the Oracle Database where you want the changes to be replicated.

Manager

The GoldenGate Manager process is used to manage all of the GoldenGate processes and resources. A single Manager process runs on each server where GoldenGate is executing and processes commands from the GoldenGate Software Command Interface (GGSCI). The Manager process is the first GoldenGate process started. The Manager then starts and stops each of the other GoldenGate processes, manages the trail files, and produces log files and reports.

This is pretty much all the theory you need to know for Oracle GoldenGate. Simple enough, right? That is the beauty of GoldenGate, and it is why Oracle’s other replication methods, Oracle CDC and Oracle Streams, have been deprecated or desupported as of 12c and may be removed in future releases. To install GoldenGate, please go through the step-by-step instructions in my post (https://nitishanandsrivastava.wordpress.com/oracle-goldengate/installing-golden-gate/).
